New preprint: Inference with Artificial Neural Networks on BrainScaleS-2

16 July, 2020, by Michael Schmuker

By Electronic Vision(s), Heidelberg University and Michael Schmuker.

NEUROTECH partner Kirchhoff-Institute at Heidelberg University has published a new preprint reporting the successful use of its BrainScaleS-2 hardware for inference with artificial neural networks (ANNs).

ANNs, such as the deep convolutional neural networks (CNNs) widely used for tasks like image recognition, rely heavily on vector-matrix multiplications. These operations are computationally costly and are usually offloaded to graphics processing units (GPUs), which provide massive floating-point performance. More recently, digital hardware tailored specifically to this task has become available, such as Google’s Tensor Processing Unit (TPU).
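To see why a CNN boils down to vector-matrix products, a convolutional layer can be rewritten as a single matrix multiplication by flattening every input patch into a vector, a standard trick often called "im2col". The NumPy sketch below is illustrative only: the function names and filter sizes are hypothetical, and it is not necessarily how the preprint maps its convolutional layer onto the hardware.

```python
import numpy as np

def conv2d_direct(image, kernels):
    """Valid (no padding, stride 1) 2-D cross-correlation, as used in CNN layers.

    image:   (H, W) single-channel input
    kernels: (K, kh, kw) bank of K filters
    returns: (K, H - kh + 1, W - kw + 1)
    """
    k, kh, kw = kernels.shape
    h_out = image.shape[0] - kh + 1
    w_out = image.shape[1] - kw + 1
    out = np.zeros((k, h_out, w_out))
    for f in range(k):
        for i in range(h_out):
            for j in range(w_out):
                out[f, i, j] = np.sum(image[i:i + kh, j:j + kw] * kernels[f])
    return out

def conv2d_as_matmul(image, kernels):
    """The same convolution, lowered to one matrix product ("im2col")."""
    k, kh, kw = kernels.shape
    h_out = image.shape[0] - kh + 1
    w_out = image.shape[1] - kw + 1
    # Each row of `patches` is one flattened receptive field, i.e. one input vector.
    patches = np.array([
        image[i:i + kh, j:j + kw].ravel()
        for i in range(h_out)
        for j in range(w_out)
    ])                                        # shape (h_out * w_out, kh * kw)
    weights = kernels.reshape(k, kh * kw).T   # shape (kh * kw, K): one column per filter
    out = patches @ weights                   # every row is a vector-matrix multiplication
    return out.T.reshape(k, h_out, w_out)

rng = np.random.default_rng(0)
image = rng.normal(size=(28, 28))        # MNIST-sized input
kernels = rng.normal(size=(8, 5, 5))     # 8 hypothetical 5x5 filters
print(np.allclose(conv2d_direct(image, kernels), conv2d_as_matmul(image, kernels)))  # True
```

Once a convolution is in this form, any device that computes vector-matrix products, whether a GPU, a TPU or an analog synapse array, can in principle execute it.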

In contrast to these widely available digital solutions, analog accelerators promise superior energy efficiency and are therefore subject to active research all over the world.

One of these projects is the BrainScaleS-2 neuromorphic system. Although it was designed primarily to emulate spiking neural networks, its analog synapse array and analog neuron circuits can also compute vector-matrix multiplications: the multiplication is carried out inside the synapse array, and the results are accumulated, in an analog fashion, on the neurons' membrane capacitors. Analog computation, however, suffers from two kinds of error. Trial-to-trial variations add noise to every operation, and manufacturing imperfections distort the results in a fixed, reproducible way. In anticipation of this issue, the BrainScaleS-2 architecture contains numerous calibration parameters that equalize the characteristics of the individual circuits and thereby mitigate manufacturing variations. The remaining systematic deviations are compensated by training ANNs with the hardware in the loop: the weights of the ANN on the hardware are optimised by a training algorithm that runs on a host computer.
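The difference between trial-to-trial noise and fixed distortions can be illustrated with a toy model. This is a sketch under stated assumptions, not a model of the BrainScaleS-2 circuits: the per-column gain and offset spreads, the noise level and the host-side linear correction (`fit_column_correction`, a hypothetical helper) are invented for illustration. The correction stands in for, and does not reproduce, the chip's on-chip calibration parameters.

```python
import numpy as np

class ToyAnalogVMM:
    """Toy analog vector-matrix multiply (all parameters hypothetical).

    Each output column j (one "neuron") computes
        y_j = gain_j * sum_i x_i * w_ij + offset_j + noise
    gain_j and offset_j are drawn once (fixed-pattern mismatch, as from
    manufacturing); noise is drawn anew on every call (trial-to-trial variation).
    """

    def __init__(self, n_rows, n_cols, gain_spread=0.1, offset_spread=5.0,
                 noise_std=2.0, seed=0):
        self.rng = np.random.default_rng(seed)
        self.gain = 1.0 + gain_spread * self.rng.standard_normal(n_cols)
        self.offset = offset_spread * self.rng.standard_normal(n_cols)
        self.noise_std = noise_std

    def __call__(self, x, w):
        ideal = x @ w
        noise = self.noise_std * self.rng.standard_normal(ideal.shape)
        return self.gain * ideal + self.offset + noise

def fit_column_correction(hw, w, probe_inputs, repeats=20):
    """Fit a per-column linear map y_ideal ~ a_j * y_measured + b_j from known
    probe inputs (a host-side stand-in for calibration, assumed for illustration)."""
    ideal = probe_inputs @ w
    # Averaging repeated runs suppresses the noise, leaving the fixed pattern.
    measured = np.mean([hw(probe_inputs, w) for _ in range(repeats)], axis=0)
    a = np.empty(w.shape[1])
    b = np.empty(w.shape[1])
    for j in range(w.shape[1]):
        a[j], b[j] = np.polyfit(measured[:, j], ideal[:, j], deg=1)  # slope, intercept
    return a, b

# Demo with hypothetical sizes: 32 input rows, 16 output columns.
rng = np.random.default_rng(1)
w = rng.integers(-63, 64, size=(32, 16)).astype(float)
x = rng.integers(0, 8, size=(200, 32)).astype(float)
hw = ToyAnalogVMM(32, 16)
a, b = fit_column_correction(hw, w, x[:100])   # fit on probe inputs
test_x = x[100:]                               # evaluate on fresh inputs
err_raw = np.abs(hw(test_x, w) - test_x @ w).mean()
err_cal = np.abs(a * hw(test_x, w) + b - test_x @ w).mean()
print(f"mean abs error raw: {err_raw:.1f}, corrected: {err_cal:.1f}")
```

In this toy, averaging many runs removes the noise but not the fixed-pattern error, which is why some form of calibration or training with the hardware in the loop is needed.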

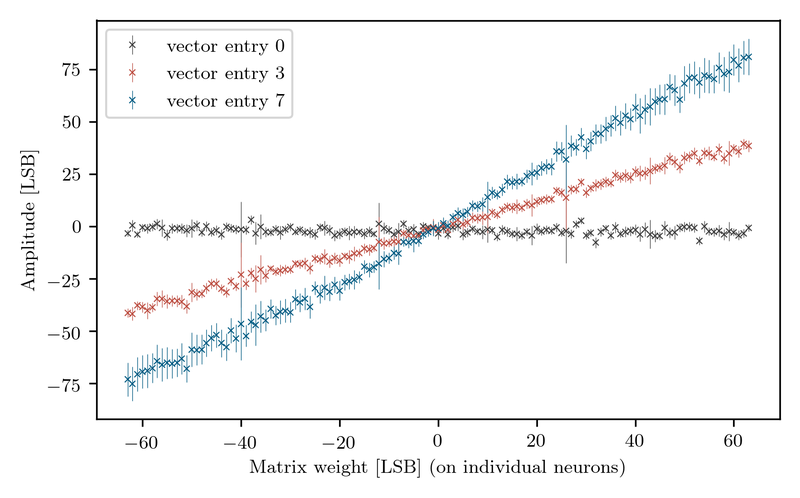

In their preprint, the BrainScaleS-2 team describes how they first characterized the vector-matrix multiplication with an artificial test matrix. The plot below shows the result of that multiplication for a range of matrix and vector values. It exhibits the expected linear behaviour as well as the systematic errors that arise from analog computation. Having shown that multiplication and accumulation work within the desired parameters for both positive and negative values, they proceeded to test their hardware on the MNIST handwritten digits dataset, arguably the most popular benchmark for CNNs.
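The layout of this characterization can be mimicked in a small simulation. Only the experiment layout follows the figure caption (128 synapse rows, 127 columns with weights from -63 to +63, constant input vectors of 0, 3 and 7, 30 repetitions); the gain, offset and noise values are hypothetical, so the simulated numbers say nothing about the real measurement.

```python
import numpy as np

N_ROWS, N_COLS, N_RUNS = 128, 127, 30

# Weight matrix from the caption: every row of column j holds -63 + j,
# so the weights sweep from -63 (leftmost column) to +63 (column 127).
weights = np.tile(np.arange(-63, 64, dtype=float), (N_ROWS, 1))   # (128, 127)

rng = np.random.default_rng(0)
# Hypothetical fixed-pattern mismatch (per column) and trial-to-trial noise level.
gain = 1.0 + 0.05 * rng.standard_normal(N_COLS)
offset = 20.0 * rng.standard_normal(N_COLS)
noise_std = 50.0

def run_once(x):
    """One simulated (toy) analog VMM: ideal product distorted by mismatch and noise."""
    return gain * (x @ weights) + offset + noise_std * rng.standard_normal(N_COLS)

results = {}
for value in (0, 3, 7):                                     # the three constant input vectors
    x = np.full(N_ROWS, float(value))
    runs = np.array([run_once(x) for _ in range(N_RUNS)])   # (30, 127)
    results[value] = (runs.mean(axis=0), runs.std(axis=0))  # error bars: std over runs

for value, (mean, std) in results.items():
    # Fit a straight line of the mean output against the column weight.
    slope, intercept = np.polyfit(weights[0], mean, deg=1)
    print(f"input {value}: slope {slope:.1f} (ideal {N_ROWS * value}), "
          f"mean std {std.mean():.1f}")
```

With an ideal multiplier, each column would output 128 · x · w, a straight line in w whose slope grows with the input value x; the per-column mismatch shows up as scatter around that line that does not shrink with more runs.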

Using one convolutional layer and two dense layers, they achieved an accuracy of 98.0 % after retraining the network with hardware in the loop, almost matching the same network’s performance evaluated on a CPU.
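Hardware-in-the-loop training can be sketched with a toy linear layer: the forward pass runs on a simulated, mismatched "chip", and the host updates the weights using the measured outputs. This is one common variant (the gradient of an ideal linear model, evaluated with the measured activations), assumed here for illustration; it is not claimed to be the preprint's exact algorithm, and the task, sizes, mismatch values and helper names (`hardware_forward`, `mse`) are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
N_IN, N_OUT, N_SAMPLES = 20, 5, 500

# Hypothetical "chip": fixed per-column gain/offset mismatch plus trial noise.
gain = 1.0 + 0.2 * rng.standard_normal(N_OUT)
offset = 0.5 * rng.standard_normal(N_OUT)

def hardware_forward(x, w):
    """Stand-in for running the VMM on analog hardware (toy model only)."""
    return gain * (x @ w) + offset + 0.01 * rng.standard_normal((x.shape[0], N_OUT))

# Toy regression task; a constant 1 input acts as a bias row the training can use.
x = np.hstack([rng.standard_normal((N_SAMPLES, N_IN)), np.ones((N_SAMPLES, 1))])
w_teacher = rng.standard_normal((N_IN + 1, N_OUT))
target = x @ w_teacher

def mse(w):
    return np.mean((hardware_forward(x, w) - target) ** 2)

# Weights trained offline on an ideal model (here simply the teacher itself),
# then deployed unchanged on the mismatched hardware.
mse_offline = mse(w_teacher)

# Hardware in the loop: forward pass on the "hardware", update on the host.
w = w_teacher.copy()        # start from the offline solution
lr = 0.05
for _ in range(300):
    y_hw = hardware_forward(x, w)          # measured outputs include the mismatch
    # Host-side gradient of the ideal linear model, x^T (y - target) / N, but with
    # the *measured* outputs. The unknown positive per-column gain only rescales
    # each column's true gradient, so the update still points downhill.
    grad = x.T @ (y_hw - target) / N_SAMPLES
    w -= lr * grad

mse_hil = mse(w)
print(f"MSE of offline weights on hardware: {mse_offline:.4f}")
print(f"MSE after hardware-in-the-loop:     {mse_hil:.4f}")
```

Because the measured outputs already contain the chip's fixed distortions, the retrained weights learn to compensate for them, which a model trained purely in software cannot do.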

In summary, the BrainScaleS-2 team has demonstrated that its system can perform CNN inference with an accuracy close to that of the same network evaluated on a CPU.

With a power consumption of only 0.3 W and an energy of 12 mJ per classified image, a BrainScaleS-2 chip is well suited as a neural network processor for edge computing. At the same time, its scalable architecture also allows higher energy efficiency for heavy workloads, such as those in datacenters.
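Taken together, the two quoted figures imply a rough time per classified image. This back-of-the-envelope estimate assumes that the 0.3 W is drawn constantly while an image is being classified, which the post does not state:

$$
t_\text{image} = \frac{E_\text{image}}{P} = \frac{12\,\text{mJ}}{0.3\,\text{W}} = 0.04\,\text{s} = 40\,\text{ms}
\quad\Rightarrow\quad
\frac{1}{t_\text{image}} \approx 25\ \text{images/s}.
$$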

The preprint has been published on arXiv at https://arxiv.org/abs/2006.13177.

Characterization of analog multiplication. Both the matrix weights and the vector entries affect the result linearly. The matrix was configured such that the weights increased in steps of one from -63 in the leftmost synapse column to +63 in the 127th column; within each column, all weights were set to the same value. Three constant input vectors were injected, with all 128 entries set to 0, 3 and 7, respectively. Error bars indicate the standard deviation across 30 runs.